An investigation of execution timeout with go-mysql

This is a real case in my recent work with go-mysql client. In this scenario, there exits a MySQL connection pool(which maintains multiple database connections) that keeps writing transactions to peer databases, such as a MySQL or TiDB. Each transaction contains several insert, update or delete DMLs, the DML count ranges from 40 to 128. In order to reduce round trip between MySQL client and MySQL server, we adopt the multiple statements in transaction, which conbines multiple DMLs in a single SQL statement. A sample code snippet with go-sql-driver is as follows.

1 | // normal transaction |

1 | // use multiple statements |

After the we change from common transaction to multiple statements SQL, we benchmark the write performance in two different environments, the main difference is that one is X86 architecture physical machine, and the other one is an ARM architecture cluster. To our surprise in the ARM cluster write throughput has 140%-180% regression and we meet many network packet errors from sql driver, while in the X86 cluster there is no regression. From logs we can observed tx.ExecContext() was blocked and timeout after 2 minutes, the underlying timeout happens in sql driver and the read from TCP connection.

[2023/03/22 19:25:45.304 +08:00] [ERROR] [packets.go:37] [“read tcp 10.233.80.156:42700->10.109.103.19:4000: i/o timeout”] [component=”[mysql]”]

[2023/03/22 19:25:45.305 +08:00] [WARN] [mysql.go:819] [“execute DMLs with error, retry later”] [error=”MySQL txn error: invalid connection”] [duration=2m0.039613308s] [query=”…”] [count=78]

The invalid connection error is very common, it can be caused by many reasons. For example if the client connection is idle for a time longer than conn-max-life-time in MySQL server, the peer MySQL will close the connection, and once the client writes data in this connection, invalid connection error will raise. To verify whether this is the cause, I checked the *DB.SetConnMaxLifetime in client side and wait_timeout variables in MySQL server side, they are both with proper value. Besides there isn’t any connection disconnecting log in MySQL server side. I have two possible suspicions here that causes write timeout in MySQL client

- One is there isn’t any data written to MySQL server, and the data is blocked somewhere such as the network send buffer.

- The other one is the transaction has been sent to MySQL and MySQL executes the query successfully but the response can’t be received by client side for some reasons.

Besides I checked kernel diagnostic messages via dmesg -T and founnd many following errors.

1 | [Wed Mar 22 21:40:41 2023] hns3 0000:bd:00.0 enp189s0f0: L3/L4 error pkt |

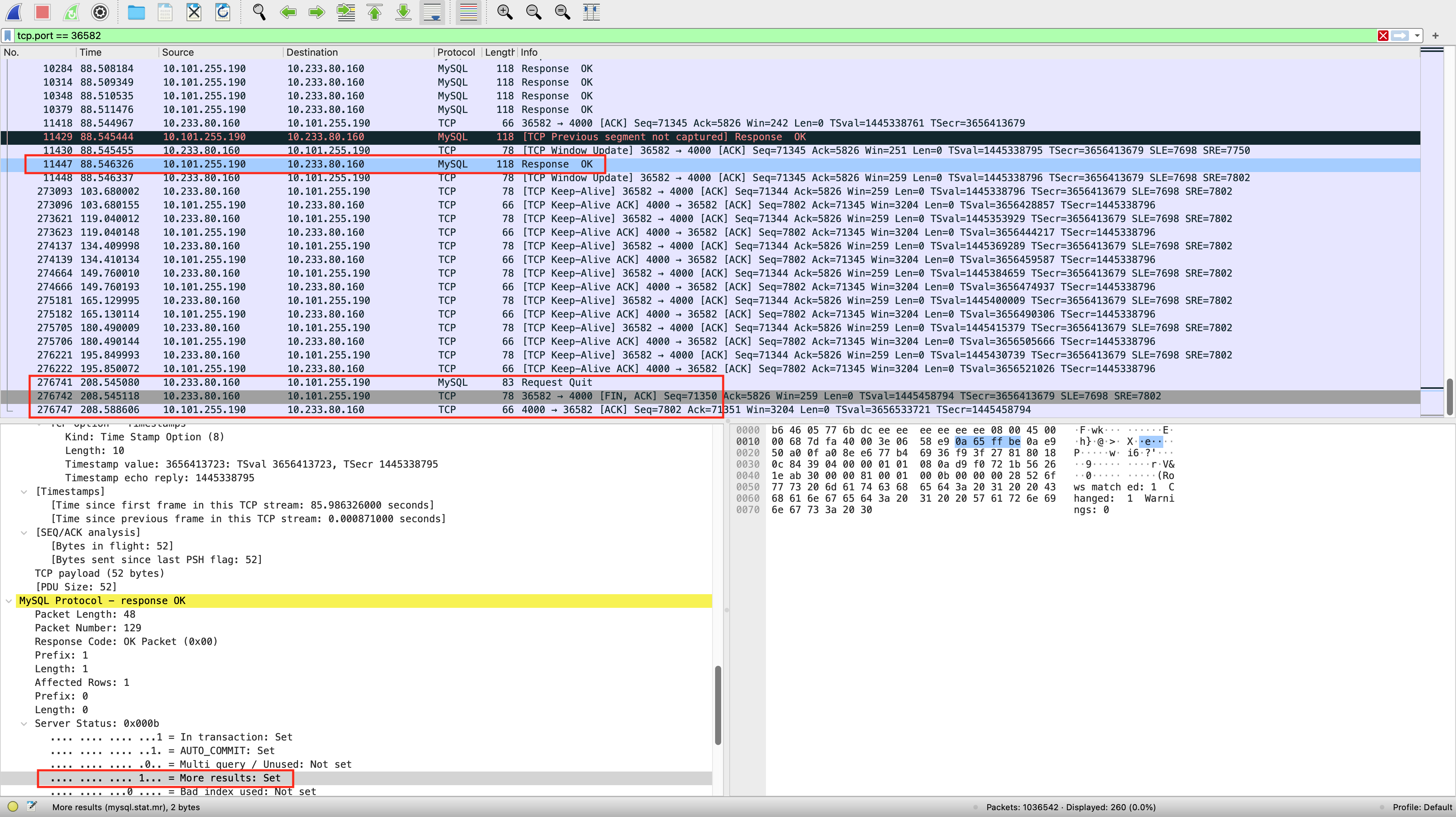

The error message suggests that there was an issue with the packet’s routing or delivery at the network or transport layer. So I captured the TCP package with tcpdump and observed it with wireshark. First I query packets with tcp.flags.fin == 1 to find out the port on disconnect connections. Then I picked one of the ports tcp.port == 36582 and observed its behavior.

- Based on the data, Number-11447 is the last MySQL response OK packet received by client on port 36582, and the data packet has

More results = 1set by MySQL, indicating that there should be more response OK packets to follow. - However, within the 120 seconds timeout period, the client side did not receive any more response OK packets.

- This phenomenon is consistent with MySQL server not having the “write: broken pipe” log, suggesting that MySQL sent the response data but lost it at the network layer. Therefore, MySQL network driver on the client side is blocked at the read packet call.

At this point, I believe the error was caused by the NIC driver. About several weeks later, after the NIC driver is upgraded by technical support of hardware vendors, this error disappears and everything is back to normal.